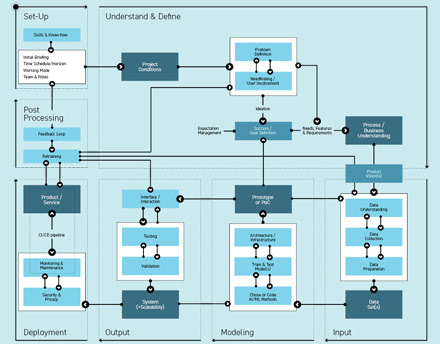

If the ‘Modeling’ module successfully leads to a (high-fidelity) prototype, PoC or MVP, the next module addresses the output.

1. Intelligent User Interfaces (IUIs)

To move towards a final solution, the modeling results from the backend need to be displayed in a frontend. This can be an app, a dashboard or any other interface. Since AI/ML-infused systems reach their conclusions based on statistics and probabilities, their behavior implies a certain level of uncertainty. An AI/ML system might also change over time due to its ability to learn and adapt to the users’ behavior. Building and maintaining trust under these circumstances is therefore a key factor in designing AI/ML systems. A couple of activities can support the team in providing the user(s) with the information needed to judge whether or not the system’s output is trustworthy. AI/ML solutions turn the concept of a static interface into smart, interactive operations. Microsoft’s HAX Toolkit (18) and Google’s People+AI Guidebook (19) offer helpful advice for this activity.

+ Transparency & Explainability: Making clear what the system can and cannot do, as well as articulating the data sources, are necessary steps in that regard. Two directions can guide this activity: general system explanations, which explain how the AI system works in general terms (e.g. types of data used, what the system is optimizing for, and how the system was trained), and specific output explanations, which explain why the AI provided a particular output at a particular time (e.g. confidence scores as categories, n-best classifications or numeric confidence levels).

+ Failure: The team needs to identify a) user, b) system, and c) context errors. User errors occur when users use the solution in an unintended way; the aim is to make failure safe, boring, and a natural part of the product, and to avoid making dangerous failures interesting or over-explaining system vulnerabilities, which can incentivize users to reproduce them. System errors occur when the system does not work or cannot provide the right answer, or any answer at all, due to its inherent limitations. Context errors occur when user expectations and system assumptions are mismatched, e.g. when an AI/ML system makes incorrect assumptions about the user (false positives or false negatives). Error message guidelines (20) can support these activities.

+ Feedback: In AI, feedback is understood as a loop of continuous learning and improvement. It should be used to improve the AI/ML system, which means making sure that the feedback signal being collected can actually be used to improve the model: the feedback needs a structure that can be translated into data points the AI/ML algorithm can benefit from. There is a difference between implicit and explicit feedback. Implicit feedback is collected while the solution is being used and is typically stored in log files. Explicit feedback is actively provided by the user(s). Because it requires their effort, it is necessary to communicate what value their feedback brings and how long it will take to have an impact.

+ Human-in-the-loop: The human-in-the-loop concept means letting the user decide if and when to opt out. The user should be able to edit system settings (e.g. data collection) and take over control. This is strongly related to the controllability principle of the Human-Centered Design approach.

+ Non-visual UIs: The actions above are helpful and necessary for AI/ML systems that have a user interface. Sometimes, however, the AI/ML system just generates a numerical output that is fed directly into a database. Even so, feedback from the user(s) is necessary, and the team needs to find a way to collect it. Gaining trust is a very tough task here, since it is hardly possible to provide transparency and explainability features to the user(s). Most probably, trust will be established over time through use and accurate AI/ML output. Marketing and communication activities can support this process.

+ Interaction: Intelligent AI/ML-based systems also change the interface through which users interact with digital systems. The input can be based on natural language, gestures, facial expressions or visual representations such as pictures and video. Effective, intuitive and natural interaction that enhances the user experience needs to be established. Interactive Machine Learning (iML) is an area of research that tries to offer a solution to this challenge. As an example, IBM offers AI conversation guidelines (21).
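
The specific output explanations mentioned under Transparency & Explainability can be illustrated with a short Python sketch. It assumes a classifier that returns raw scores (logits) per label; the softmax conversion, the thresholds `HIGH_THRESHOLD` and `LOW_THRESHOLD`, and the helper names are hypothetical choices for illustration, not part of the HAX Toolkit, the People+AI Guidebook or any other toolkit. The sketch turns raw scores into an n-best list that carries both a numeric and a categorical confidence level.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical cut-offs for mapping a probability to a category shown in the UI;
# real values would have to be calibrated on the team's own data.
HIGH_THRESHOLD = 0.8
LOW_THRESHOLD = 0.5


def softmax(logits: Dict[str, float]) -> Dict[str, float]:
    """Convert raw model scores into probabilities that sum to 1."""
    max_logit = max(logits.values())  # subtracting the max avoids overflow in exp()
    exps = {label: math.exp(score - max_logit) for label, score in logits.items()}
    total = sum(exps.values())
    return {label: value / total for label, value in exps.items()}


def confidence_category(p: float) -> str:
    """Map a numeric confidence level to a categorical one."""
    if p >= HIGH_THRESHOLD:
        return "high"
    if p >= LOW_THRESHOLD:
        return "medium"
    return "low"


def explain_output(logits: Dict[str, float], n: int = 3) -> List[Tuple[str, float, str]]:
    """Return the n-best classifications with numeric and categorical confidence."""
    probs = softmax(logits)
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:n]
    return [(label, round(p, 2), confidence_category(p)) for label, p in ranked]


if __name__ == "__main__":
    for label, p, category in explain_output({"cat": 2.0, "dog": 1.0, "fox": 0.1}):
        print(f"{label}: {p:.0%} ({category} confidence)")
```

With the example scores, `cat` is ranked first at roughly 0.66 and labeled "medium", which gives the UI a way to signal uncertainty instead of presenting the top answer as a fact. Raw softmax scores are often poorly calibrated, so any thresholds of this kind would need to be validated with data and users.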
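
One possible way to give feedback the structure described under Feedback, while honoring the opt-out control from Human-in-the-loop, is sketched below. The `FeedbackEvent` and `FeedbackLog` names and their fields are hypothetical; the sketch assumes every prediction carries an ID and that either a correction or an accept/reject signal can be captured, implicitly from usage logs or explicitly from the user.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import List, Optional, Set, Tuple


@dataclass
class FeedbackEvent:
    """One feedback signal, structured so it can later become a training data point."""
    user_id: str
    prediction_id: str
    predicted_label: str
    kind: str                              # "implicit" (from usage logs) or "explicit"
    corrected_label: Optional[str] = None  # the right answer, if the user supplied one
    accepted: Optional[bool] = None        # e.g. thumbs up/down, or output used vs. ignored
    timestamp: float = field(default_factory=time.time)


class FeedbackLog:
    def __init__(self) -> None:
        self.events: List[FeedbackEvent] = []
        self.opted_out: Set[str] = set()

    def opt_out(self, user_id: str) -> None:
        """Human-in-the-loop control: the user switches off data collection."""
        self.opted_out.add(user_id)

    def record(self, event: FeedbackEvent) -> bool:
        """Store an event unless its user has opted out; return whether it was stored."""
        if event.user_id in self.opted_out:
            return False
        self.events.append(event)
        return True

    def to_json_lines(self) -> str:
        """Serialize events as JSON lines, e.g. for an append-only log file."""
        return "\n".join(json.dumps(asdict(event)) for event in self.events)

    def training_examples(self) -> List[Tuple[str, str]]:
        """Keep only signals that translate into (prediction_id, label) data points."""
        examples = []
        for event in self.events:
            if event.corrected_label is not None:
                examples.append((event.prediction_id, event.corrected_label))
            elif event.accepted:
                examples.append((event.prediction_id, event.predicted_label))
        return examples
```

A rejection without a correction is logged but does not become a labeled example, which illustrates the point that not every feedback signal can be used directly to improve the model.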

2. Testing

+ Detect & check for outliers and errors: Mislabeled or misclassified results, poor inference, an incorrect choice of ML model and related settings, or missing and incomplete data can be reasons for poor system performance. It is therefore necessary to check the output quality for relevance errors and to disambiguate system hierarchy errors.

+ Usability testing: Only a small number of users will interact with the system as initially planned by the development team. Investing in beta testing and pilot programs is necessary to spot unintended dead ends and negative user experiences. User testing, observation and A/B testing are typical methods in this area.
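
A first pass at detecting outliers and errors can be as simple as Tukey's interquartile-range fences on a numeric model output, combined with a filter for items where the model confidently disagrees with the stored label, which is a common hint at mislabeled data. Both functions and the defaults `k = 1.5` and `min_conf = 0.9` are illustrative assumptions, not a complete error analysis.

```python
import statistics
from typing import List, Sequence


def iqr_outliers(values: Sequence[float], k: float = 1.5) -> List[int]:
    """Indices of values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # needs at least two values
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, v in enumerate(values) if v < low or v > high]


def suspicious_labels(labels: Sequence[str], predictions: Sequence[str],
                      confidences: Sequence[float], min_conf: float = 0.9) -> List[int]:
    """Indices where the model confidently disagrees with the stored label:
    candidates for mislabeled data or for a systematic model error."""
    return [
        i
        for i, (label, pred, conf) in enumerate(zip(labels, predictions, confidences))
        if label != pred and conf >= min_conf
    ]


if __name__ == "__main__":
    predicted_prices = [10, 11, 12, 11, 10, 12, 11, 95]
    print(iqr_outliers(predicted_prices))
    print(suspicious_labels(["a", "b", "a"], ["a", "a", "b"], [0.99, 0.95, 0.6]))
```

Flagged items are candidates for manual review rather than automatic removal; a domain expert still has to decide whether an outlier is an error or a rare but valid case.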
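
For the A/B testing mentioned under Usability testing, one common way to compare two interface variants is a two-proportion z-test on a binary outcome such as task success. The sketch below assumes such a success metric exists and uses only the Python standard library; it is a starting point, not a substitute for proper experiment design (sample size planning, metrics fixed in advance).

```python
import math
from typing import Tuple


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> Tuple[float, float]:
    """Compare the success rates of variants A and B.

    Returns the z statistic (positive if B is better) and a two-sided p-value,
    using the pooled standard error under the null hypothesis of equal rates.
    """
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):
        raise ValueError("all or no trials succeeded; the test is undefined")
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function: Phi(x) = (1 + erf(x / sqrt(2))) / 2
    phi = (1 + math.erf(abs(z) / math.sqrt(2))) / 2
    return z, 2 * (1 - phi)


if __name__ == "__main__":
    z, p = two_proportion_z_test(120, 1000, 150, 1000)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

With 120 of 1,000 successful sessions for A and 150 of 1,000 for B, z is about 1.96 and the p-value lands just under 0.05, a borderline result that is a reason to keep testing rather than to declare a winner.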

3. Validation

+ Check success criteria: Monitoring accuracy and performance over time, across different use cases and users, increases the chance of uncovering issues with the system.

+ Check expectations: Evaluating whether the expectations set at the beginning are still valid or need to be adjusted.
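
Checking success criteria over time can be sketched as computing accuracy per time period and segment (use case or user group) and flagging every combination that falls below the agreed threshold. The record format and the `MIN_ACCURACY = 0.85` criterion are hypothetical placeholders for whatever the team defined at the start of the project.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical success criterion agreed on at the start of the project.
MIN_ACCURACY = 0.85

Key = Tuple[str, str]  # (period, segment), e.g. ("2024-W01", "use_case_A")


def accuracy_by_segment(records: Iterable[Tuple[str, str, bool]]) -> Dict[Key, float]:
    """Accuracy per (period, segment) from records of (period, segment, correct)."""
    hits: Dict[Key, int] = defaultdict(int)
    totals: Dict[Key, int] = defaultdict(int)
    for period, segment, correct in records:
        totals[(period, segment)] += 1
        hits[(period, segment)] += int(correct)
    return {key: hits[key] / totals[key] for key in totals}


def below_criterion(accuracy: Dict[Key, float],
                    threshold: float = MIN_ACCURACY) -> List[Key]:
    """(period, segment) pairs whose accuracy falls below the success criterion."""
    return sorted(key for key, value in accuracy.items() if value < threshold)
```

Breaking accuracy down by segment matters because an overall average can stay above the criterion while one use case or user group quietly degrades.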

This module involves many actions that are related to HCD/UX design tasks. Close collaboration with data scientists and ML engineers is needed to predict system behavior and potential functionality across both the positive and the negative spectrum; these need to be taken into account and made visible and understandable for the user(s). The shift from static interfaces to smart, dynamic interactions represents a new design material. Potential outcomes and outputs are too complex to be planned in every detail. Failure and malfunction are inevitable and need to be incorporated as a design feature.

Outcome: System (+ Scalability)

‘Input’, ‘Modeling’ and ‘Output’ modules are strongly interdependent, and several iterations will be needed to reach an AI/ML system that is accurate, usable, and time- and cost-efficient (combining business viability, technological feasibility and user desirability).

If the AI/ML system is very error-prone and transparency and explainability are hard to achieve, the team needs to start over with the ‘Modeling’ module, which might lead it back to the ‘Input’ module. If test results show a poor user experience, the team needs to start over with the ‘Output’ module and redesign the interface/interaction.

If the outcome of this module is an AI/ML solution that produces stable and accurate output on small as well as larger data sets, whose user interface supports its users in reaching their goals and in trusting the system’s output, and which business domain experts perceive as adding value in terms of time or cost efficiency, the transfer from a PoC to a productive system can be made. Scalability refers to the notion that an implemented system could, in the best case, be transferred to similar use cases or to other business units and problems as well.
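
The iteration and transfer rules above can be written down as a simple decision function, which may help make the go/no-go criteria explicit for the whole team. This is a simplified, hypothetical reading of the text: all field names, thresholds and return messages are assumptions, and "error-prone" is approximated by accuracy on the larger data set and the drop from the small one.

```python
from dataclasses import dataclass


@dataclass
class EvaluationResult:
    """Hypothetical summary of one iteration; field names are illustrative."""
    accuracy_small: float  # accuracy on the small (PoC) data set
    accuracy_large: float  # accuracy on a larger, more realistic data set
    explainable: bool      # could transparency/explainability be achieved?
    ux_score: float        # e.g. a normalised usability score in [0, 1]
    business_value: bool   # do domain experts see a time or cost benefit?


def next_step(r: EvaluationResult, min_acc: float = 0.85,
              max_drop: float = 0.05, min_ux: float = 0.7) -> str:
    """Route the team according to a simplified version of the iteration rules."""
    # "Stable and accurate" on small and larger data sets (assumed thresholds).
    stable = (r.accuracy_large >= min_acc
              and r.accuracy_small - r.accuracy_large <= max_drop)
    if not stable or not r.explainable:
        return "revisit Modeling (possibly Input)"
    if r.ux_score < min_ux:
        return "revisit Output (redesign interface/interaction)"
    if not r.business_value:
        return "not ready: business value not confirmed"
    return "transfer PoC to production"
```

The order of the checks mirrors the text: modeling problems are addressed before interface problems, and the transfer to a productive system only happens when all conditions hold at once.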